rCore Chapter 8

User-level threads

Data structures

use std::arch::asm;

const DEFAULT_STACK_SIZE: usize = 1024 * 1024 * 2;

const MAX_THREADS: usize = 4;

static mut RUNTIME: usize = 0;

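These declare a few global constants and variables: DEFAULT_STACK_SIZE is the size of each thread's stack (2 MiB), MAX_THREADS caps the number of threads, and RUNTIME holds the address of the Runtime as a usize so that guard can reach it later.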

pub struct Runtime {

threads: Vec<Thread>,

current: usize,

}

#[derive(PartialEq, Eq, Debug)]

enum State {

Available,

Running,

Ready,

}

struct Thread {

id: usize,

stack: Vec<u8>,

ctx: ThreadContext,

state: State,

}

#[derive(Debug, Default)]

#[repr(C)]

struct ThreadContext {

rsp: u64,

r15: u64,

r14: u64,

r13: u64,

r12: u64,

rbx: u64,

rbp: u64,

}

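These are the data structures that user-level threads need. User-level threads are invisible to the kernel, so we need a Runtime to schedule them; the Runtime maintains a queue of Thread entries. Apart from having its own stack, a user-level thread is switched through an ordinary function call, so when saving register state we only need to save the callee-saved registers.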

Runtime

Before running any user-level thread, the runtime has to be initialized.

impl Thread {

fn new(id: usize) -> Self {

Thread {

id,

stack: vec![0_u8; DEFAULT_STACK_SIZE],

ctx: ThreadContext::default(),

state: State::Available,

}

}

}

impl Runtime {

pub fn new() -> Self {

let base_thread = Thread {

id: 0,

stack: vec![0_u8; DEFAULT_STACK_SIZE],

ctx: ThreadContext::default(),

state: State::Running,

};

let mut threads = vec![base_thread];

let mut available_threads: Vec<Thread> = (1..MAX_THREADS)

.map(|i| Thread::new(i))

.collect();

threads.append(&mut available_threads);

Runtime {

threads,

current: 0,

}

}

pub fn init(&self) {

unsafe {

let r_ptr: *const Runtime = self;

RUNTIME = r_ptr as usize;

}

}

}

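This initializes the main thread (id 0), which is the thread that runs by default at startup and from which the other threads are scheduled. All remaining thread slots are created in the Available state and appended to the queue. The runtime therefore consists of this queue plus the index of the current thread.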

impl Runtime {

#[inline(never)]

fn t_yield(&mut self) -> bool {

let mut pos = self.current;

while self.threads[pos].state != State::Ready {

pos += 1;

if pos == self.threads.len() {

pos = 0;

}

if pos == self.current {

return false;

}

}

if self.threads[self.current].state != State::Available {

self.threads[self.current].state = State::Ready;

}

self.threads[pos].state = State::Running;

let old_pos = self.current;

self.current = pos;

unsafe {

let old: *mut ThreadContext = &mut self.threads[old_pos].ctx;

let new: *const ThreadContext = &self.threads[pos].ctx;

asm!("call switch", in("rdi") old, in("rsi") new, clobber_abi("C"));

}

self.threads.len() > 0

}

}

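First, t_yield. The method walks the thread queue round-robin, starting from current, until it finds a thread whose state is Ready; if it gets back to current without finding one, it returns false. Otherwise it sets the current thread's state to Ready (unless the thread has finished and its slot is Available), marks the selected thread as Running, and switches from the old context to the new one.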
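The selection and state-transition part of t_yield can be tried out without any assembly. The following is a minimal sketch under that assumption: `next_ready` and `yield_states` are hypothetical helper names that do not appear in the original code, and the context switch itself is left out.

```rust
#[derive(Clone, Copy, PartialEq, Eq, Debug)]
enum State {
    Available,
    Running,
    Ready,
}

/// Round-robin search: starting at `current`, walk the slots circularly and
/// return the index of the first `Ready` one, or `None` after a full lap.
fn next_ready(states: &[State], current: usize) -> Option<usize> {
    let mut pos = current;
    while states[pos] != State::Ready {
        pos += 1;
        if pos == states.len() {
            pos = 0;
        }
        if pos == current {
            return None;
        }
    }
    Some(pos)
}

/// Apply the state changes that t_yield performs around the switch and
/// return the index of the thread that would run next.
fn yield_states(states: &mut [State], current: usize) -> Option<usize> {
    let next = next_ready(states, current)?;
    // A finished thread keeps its Available state; a live one becomes Ready.
    if states[current] != State::Available {
        states[current] = State::Ready;
    }
    states[next] = State::Running;
    Some(next)
}
```

With the slots `[Running, Available, Ready, Available]` and current thread 0, the sketch picks slot 2 and leaves slot 0 in the Ready state, so the next yield from slot 2 wraps around to slot 0 again.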

pub fn spawn(&mut self, f: fn()) {

let available = self

.threads

.iter_mut()

.find(|t| t.state == State::Available)

.expect("no available thread.");

let size = available.stack.len();

unsafe {

let s_ptr = available.stack.as_mut_ptr().offset(size as isize);

let s_ptr = (s_ptr as usize & !15) as *mut u8;

std::ptr::write(s_ptr.offset(-16) as *mut u64, guard as u64);

std::ptr::write(s_ptr.offset(-24) as *mut u64, skip as u64);

std::ptr::write(s_ptr.offset(-32) as *mut u64, f as u64);

available.ctx.rsp = s_ptr.offset(-32) as u64;

}

available.state = State::Ready;

}

#[naked]

unsafe extern "C" fn skip() {

asm!("ret", options(noreturn))

}

fn guard() {

unsafe {

let rt_ptr = RUNTIME as *mut Runtime;

(*rt_ptr).t_return();

};

}

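spawn walks the queue to find a Thread slot that is not in use (state Available) and writes the addresses of guard, skip and f into that thread's stack, at offsets -16, -24 and -32 from the 16-byte-aligned stack top. It then points rsp in the saved context at the slot holding f, so that the ret at the end of switch jumps into f. With its state set to Ready, the thread is now created and part of the queue.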
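The address arithmetic in spawn is easy to get wrong, so here is a small sketch that computes the same three slots from plain integers. `StackLayout` and `layout` are hypothetical names introduced only for this illustration; no memory is written.

```rust
/// Addresses of the three slots that spawn writes, relative to the stack.
#[derive(Debug, PartialEq, Eq)]
struct StackLayout {
    top: usize,        // stack top rounded down to a 16-byte boundary
    guard_slot: usize, // top - 16: address of guard
    skip_slot: usize,  // top - 24: address of skip
    f_slot: usize,     // top - 32: address of f, also the initial rsp
}

/// `base` is the address of the first stack byte, `size` the stack length.
fn layout(base: usize, size: usize) -> StackLayout {
    let top = (base + size) & !15;
    StackLayout {
        top,
        guard_slot: top - 16,
        skip_slot: top - 24,
        f_slot: top - 32,
    }
}
```

The ret in switch pops f, so f starts with rsp at top - 24. When f returns it pops skip, and the ret inside skip pops guard, so guard starts with rsp at top - 8. In both cases rsp + 8 is a multiple of 16, which is the state the System V x86-64 ABI expects at function entry; this is the reason for the extra skip slot.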

Once all threads have been created, the main thread calls the run method:

impl Runtime {

pub fn run(&mut self) -> ! {

while self.t_yield() {}

std::process::exit(0);

}

fn t_return(&mut self) {

if self.current != 0 {

self.threads[self.current].state = State::Available;

self.t_yield();

}

}

}

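run repeatedly calls the t_yield method analyzed above until no Ready thread is left. t_return is what guard calls when a thread's function returns: it marks the slot as Available and yields one last time.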

#[naked]

#[no_mangle]

unsafe extern "C" fn switch() {

asm!(

"mov [rdi + 0x00], rsp",

"mov [rdi + 0x08], r15",

"mov [rdi + 0x10], r14",

"mov [rdi + 0x18], r13",

"mov [rdi + 0x20], r12",

"mov [rdi + 0x28], rbx",

"mov [rdi + 0x30], rbp",

"mov rsp, [rsi + 0x00]",

"mov r15, [rsi + 0x08]",

"mov r14, [rsi + 0x10]",

"mov r13, [rsi + 0x18]",

"mov r12, [rsi + 0x20]",

"mov rbx, [rsi + 0x28]",

"mov rbp, [rsi + 0x30]",

"ret", options(noreturn)

);

}

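Above is the switch function. It saves the callee-saved registers of the old thread into the context pointed to by rdi, loads those of the new thread from the context pointed to by rsi, and returns on the new thread's stack.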
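The hard-coded offsets in switch only work because ThreadContext is declared with #[repr(C)] and its fields are listed in the same order as the mov instructions. A quick way to convince yourself is to measure the offsets; `field_offset` and `context_offsets` below are hypothetical helpers written for this check, not part of the original code.

```rust
#[derive(Debug, Default)]
#[repr(C)]
struct ThreadContext {
    rsp: u64,
    r15: u64,
    r14: u64,
    r13: u64,
    r12: u64,
    rbx: u64,
    rbp: u64,
}

/// Byte distance between the start of the struct and one of its fields.
fn field_offset(ctx: &ThreadContext, field: &u64) -> usize {
    field as *const u64 as usize - ctx as *const ThreadContext as usize
}

/// Offsets of all fields, in the order used by the assembly in switch.
fn context_offsets() -> [usize; 7] {
    let c = ThreadContext::default();
    [
        field_offset(&c, &c.rsp),
        field_offset(&c, &c.r15),
        field_offset(&c, &c.r14),
        field_offset(&c, &c.r13),
        field_offset(&c, &c.r12),
        field_offset(&c, &c.rbx),
        field_offset(&c, &c.rbp),
    ]
}
```

If a field were added or reordered without updating the assembly, comparing `context_offsets()` against `[0x00, 0x08, 0x10, 0x18, 0x20, 0x28, 0x30]` would catch it.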

Kernel threads

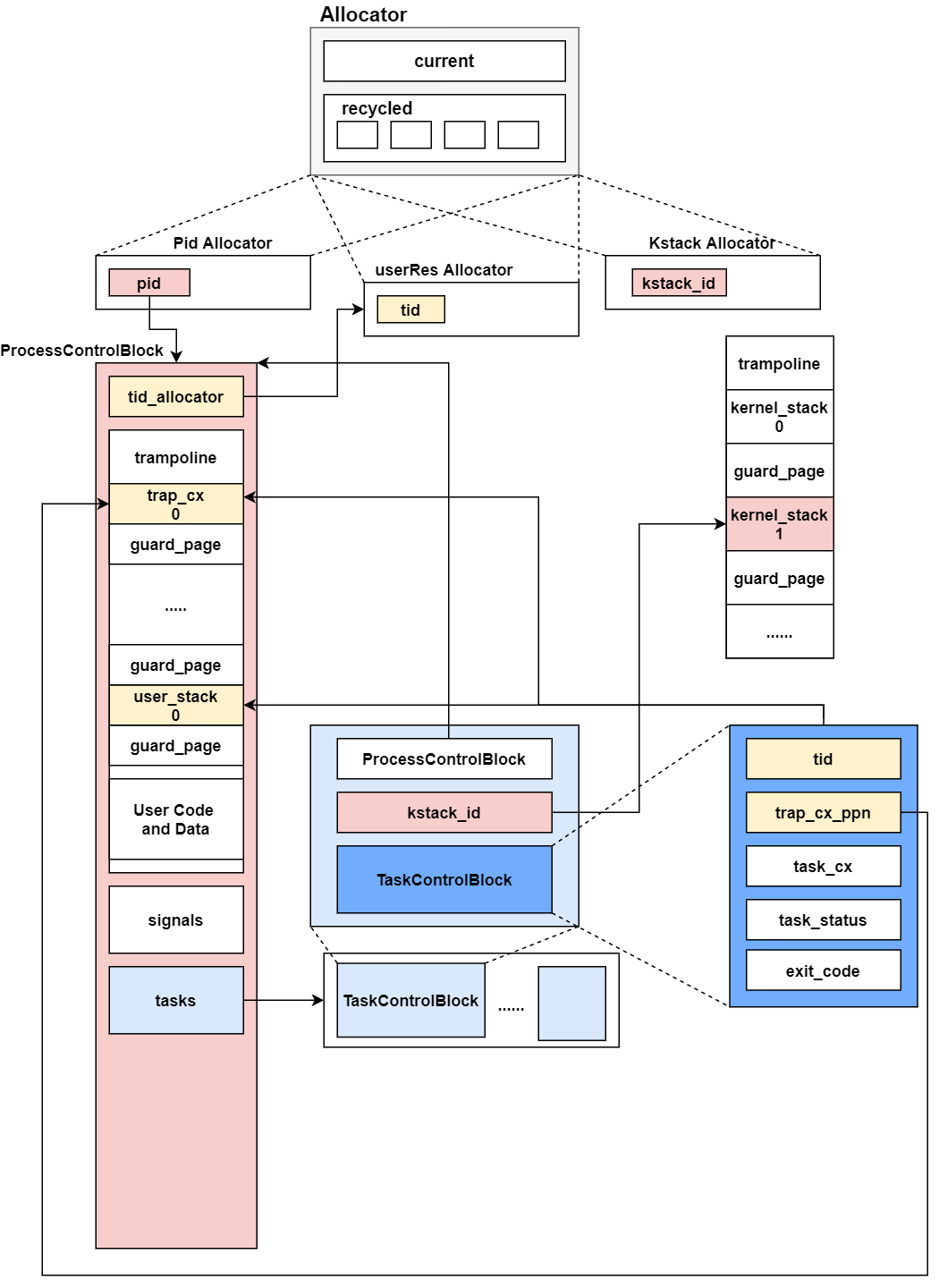

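There are three resource allocators here: one for pid, one for kstack_id, and one for userRes_id. userRes IDs are allocated per process, within the resources of each pid, so every process starts allocating them from 0. kstack_id, by contrast, treats the threads of all processes alike: it needs a single allocator that spans processes, so that the kernel stacks of threads in different processes never overlap.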
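All three allocators hand out small integer IDs and take them back later. The notes only show the type name RecycleAllocator, not its body, so the following is a sketch of one plausible way such an allocator can work; the field names `current` and `recycled` are assumptions made for this illustration.

```rust
/// Hands out IDs 0, 1, 2, ... and reuses IDs that were given back.
struct RecycleAllocator {
    current: usize,       // next ID that has never been handed out
    recycled: Vec<usize>, // IDs that were returned and can be reused
}

impl RecycleAllocator {
    fn new() -> Self {
        RecycleAllocator { current: 0, recycled: Vec::new() }
    }

    /// Prefer a recycled ID; otherwise take a fresh one.
    fn alloc(&mut self) -> usize {
        if let Some(id) = self.recycled.pop() {
            id
        } else {
            self.current += 1;
            self.current - 1
        }
    }

    /// Return an ID. Panics on IDs that were never allocated or are
    /// returned twice, since both indicate a bookkeeping bug.
    fn dealloc(&mut self, id: usize) {
        assert!(id < self.current, "id {} was never allocated", id);
        assert!(!self.recycled.contains(&id), "id {} returned twice", id);
        self.recycled.push(id);
    }
}
```

One instance per process gives each process its own ID space starting at 0, while a single global instance yields IDs that are unique across processes, which matches the distinction between userRes_id and kstack_id described above.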

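As described above, a ProcessControlBlock has its own allocator for thread IDs (task_res_allocator in the code), plus an address-space mapping that all threads of the process share. Its tasks queue holds one TaskControlBlock per thread, which stores per-thread information such as tid, task_cx and trap_cx_ppn.

When we create a process, the code is as follows: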

pub fn new(elf_data: &[u8]) -> Arc<Self> {

let (memory_set, ustack_base, entry_point) = MemorySet::from_elf(elf_data);

let pid_handle = pid_alloc();

let process = Arc::new(Self {

pid: pid_handle,

inner: unsafe {

UPSafeCell::new(ProcessControlBlockInner {

is_zombie: false,

memory_set,

parent: None,

children: Vec::new(),

exit_code: 0,

fd_table: vec![

Some(Arc::new(Stdin)),

Some(Arc::new(Stdout)),

Some(Arc::new(Stdout)),

],

signals: SignalFlags::empty(),

tasks: Vec::new(),

task_res_allocator: RecycleAllocator::new(),

mutex_list: Vec::new(),

semaphore_list: Vec::new(),

condvar_list: Vec::new(),

})

},

});

let task = Arc::new(TaskControlBlock::new(

Arc::clone(&process),

ustack_base,

true,

));

let task_inner = task.inner_exclusive_access();

let trap_cx = task_inner.get_trap_cx();

let ustack_top = task_inner.res.as_ref().unwrap().ustack_top();

let kstack_top = task.kstack.get_top();

drop(task_inner);

*trap_cx = TrapContext::app_init_context(

entry_point,

ustack_top,

KERNEL_SPACE.exclusive_access().token(),

kstack_top,

trap_handler as usize,

);

let mut process_inner = process.inner_exclusive_access();

process_inner.tasks.push(Some(Arc::clone(&task)));

drop(process_inner);

insert_into_pid2process(process.getpid(), Arc::clone(&process));

add_task(task);

process

}

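From the contents of the ELF file we obtain the address space memory_set, then allocate a pid and build a ProcessControlBlock from them. This structure holds the memory_set, the parent/child relationships, the exit_code, the file descriptor table, the signal flags, the thread queue, the thread ID allocator, and the lists of mutexes, semaphores and condition variables.

Some of these resources need initialization. For the thread queue we create a main thread, whose tid is 0, and set up its entry address, stack, satp (the kernel address-space token) and trap handler. Finally the thread is pushed onto the process's queue and added to the global scheduling queue, and the process is returned.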

pub fn exec(self: &Arc<Self>, elf_data: &[u8], args: Vec<String>) {

assert_eq!(self.inner_exclusive_access().thread_count(), 1);

let (memory_set, ustack_base, entry_point) = MemorySet::from_elf(elf_data);

let new_token = memory_set.token();

self.inner_exclusive_access().memory_set = memory_set;

let task = self.inner_exclusive_access().get_task(0);

let mut task_inner = task.inner_exclusive_access();

task_inner.res.as_mut().unwrap().ustack_base = ustack_base;

task_inner.res.as_mut().unwrap().alloc_user_res();

task_inner.trap_cx_ppn = task_inner.res.as_mut().unwrap().trap_cx_ppn();

let mut user_sp = task_inner.res.as_mut().unwrap().ustack_top();

user_sp -= (args.len() + 1) * core::mem::size_of::<usize>();

let argv_base = user_sp;

let mut argv: Vec<_> = (0..=args.len())

.map(|arg| {

translated_refmut(

new_token,

(argv_base + arg * core::mem::size_of::<usize>()) as *mut usize,

)

})

.collect();

*argv[args.len()] = 0;

for i in 0..args.len() {

user_sp -= args[i].len() + 1;

*argv[i] = user_sp;

let mut p = user_sp;

for c in args[i].as_bytes() {

*translated_refmut(new_token, p as *mut u8) = *c;

p += 1;

}

*translated_refmut(new_token, p as *mut u8) = 0;

}

user_sp -= user_sp % core::mem::size_of::<usize>();

let mut trap_cx = TrapContext::app_init_context(

entry_point,

user_sp,

KERNEL_SPACE.exclusive_access().token(),

task.kstack.get_top(),

trap_handler as usize,

);

trap_cx.x[10] = args.len();

trap_cx.x[11] = argv_base;

*task_inner.get_trap_cx() = trap_cx;

}

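To execute an ELF file, we parse it to obtain a new memory_set and replace the old one. We then replace the main thread's user stack base, allocate the thread's user resources again with alloc_user_res, and set the new trap_cx_ppn. Next, the arguments passed to exec are pushed onto the user stack: first the argv pointer array, then the NUL-terminated strings, after which user_sp is aligned down to the word size. Finally we initialize the trap context stored at trap_cx_ppn, set x[10] to the argument count and x[11] to argv_base, and return.

// fork is only supported for processes with a single thread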
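The stack arithmetic in exec can be followed with plain integers. The sketch below repeats only the address computation and writes nothing to memory; `layout_args` is a hypothetical helper, and `WORD` is fixed at 8 on the assumption of a 64-bit target.

```rust
/// Word size in bytes, assumed to be 8 (64-bit target).
const WORD: usize = 8;

/// Returns (final user_sp, argv_base, contents of the argv array).
/// The argv array has one entry per argument plus a terminating 0.
fn layout_args(ustack_top: usize, args: &[&str]) -> (usize, usize, Vec<usize>) {
    let mut user_sp = ustack_top;

    // Reserve the pointer array argv[0..=n] directly below the stack top.
    user_sp -= (args.len() + 1) * WORD;
    let argv_base = user_sp;
    let mut argv = vec![0usize; args.len() + 1];

    // Place each string (plus its NUL byte) below the previous one.
    for (i, arg) in args.iter().enumerate() {
        user_sp -= arg.len() + 1;
        argv[i] = user_sp;
    }

    // Align the final stack pointer down to a word boundary.
    user_sp -= user_sp % WORD;
    (user_sp, argv_base, argv)
}
```

For a stack top of 0x10000 and the arguments "ab" and "cde", the pointer array occupies 0xFFE8..0x10000, "ab" starts at 0xFFE5, "cde" at 0xFFE1, and the aligned stack pointer ends up at 0xFFE0.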

pub fn fork(self: &Arc<Self>) -> Arc<Self> {

let mut parent = self.inner_exclusive_access();

assert_eq!(parent.thread_count(), 1);

let memory_set = MemorySet::from_existed_user(&parent.memory_set);

let pid = pid_alloc();

let mut new_fd_table: Vec<Option<Arc<dyn File + Send + Sync>>> = Vec::new();

for fd in parent.fd_table.iter() {

if let Some(file) = fd {

new_fd_table.push(Some(file.clone()));

} else {

new_fd_table.push(None);

}

}

let child = Arc::new(Self {

pid,

inner: unsafe {

UPSafeCell::new(ProcessControlBlockInner{

is_zombie: false,

memory_set,

parent: Some(Arc::downgrade(self)),

children: Vec::new(),

exit_code: 0,

fd_table: new_fd_table,

signals: SignalFlags::empty(),

tasks: Vec::new(),

task_res_allocator: RecycleAllocator::new(),

mutex_list: Vec::new(),

semaphore_list: Vec::new(),

condvar_list: Vec::new(),

})

},

});

parent.children.push(Arc::clone(&child));

let task = Arc::new(TaskControlBlock::new(

Arc::clone(&child),

parent

.get_task(0)

.inner_exclusive_access()

.res

.as_ref()

.unwrap()

.ustack_base(),

false,

));

let mut child_inner = child.inner_exclusive_access();

child_inner.tasks.push(Some(Arc::clone(&task)));

drop(child_inner);

let task_inner = task.inner_exclusive_access();

let trap_cx = task_inner.get_trap_cx();

trap_cx.kernel_sp = task.kstack.get_top();

drop(task_inner);

insert_into_pid2process(child.getpid(), Arc::clone(&child));

add_task(task);

child

}

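First we copy the parent's address space, allocate a pid, and copy the parent's file descriptor table. The child is then pushed onto the parent's children list. As in the functions above, we create the main thread for the new process and push it onto the ProcessControlBlock's thread queue. Finally the main thread is added to the scheduling queue.

With multiple threads, exiting becomes the more complicated part: we have to distinguish the case where the exiting thread is the main thread.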

pub fn exit_current_and_run_next(exit_code: i32) {

let task = take_current_task().unwrap();

let mut task_inner = task.inner_exclusive_access();

let process = task.process.upgrade().unwrap();

let tid = task_inner.res.as_ref().unwrap().tid;

task_inner.exit_code = Some(exit_code);

task_inner.res = None;

drop(task_inner);

drop(task);

if tid == 0 {

let pid = process.getpid();

if pid == IDLE_PID {

println!(

"[kernel] Idle process exit with exit_code {} ...",

exit_code

);

if exit_code != 0 {

crate::board::QEMU_EXIT_HANDLE.exit_failure();

} else {

crate::board::QEMU_EXIT_HANDLE.exit_success();

}

}

remove_from_pid2process(pid);

let mut process_inner = process.inner_exclusive_access();

process_inner.is_zombie = true;

process_inner.exit_code = exit_code;

{

let mut initproc_inner = INITPROC.inner_exclusive_access();

for child in process_inner.children.iter() {

child.inner_exclusive_access().parent = Some(Arc::downgrade(&INITPROC));

initproc_inner.children.push(child.clone());

}

}

let mut recycle_res = Vec::<TaskUserRes>::new();

for task in process_inner.tasks.iter().filter(|t| t.is_some()) {

let task = task.as_ref().unwrap();

remove_inactive_task(Arc::clone(&task));

let mut task_inner = task.inner_exclusive_access();

if let Some(res) = task_inner.res.take() {

recycle_res.push(res);

}

}

drop(process_inner);

recycle_res.clear();

let mut process_inner = process.inner_exclusive_access();

process_inner.children.clear();

process_inner.memory_set.recycle_data_pages();

process_inner.fd_table.clear();

process_inner.tasks.clear();

}

drop(process);

let mut _unused = TaskContext::zero_init();

schedule(&mut _unused as *mut _);

}

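First we get the current thread's tid and set the thread's exit_code. If the tid is 0, the main thread is exiting, so we also set the process's exit_code, mark the process as a zombie, and move all of its child processes under the init process. Next we reclaim the threads' resources: we create a Vec of TaskUserRes, iterate over the process's task queue, remove each thread from the scheduler, and collect its TaskUserRes. Clearing the Vec then releases the thread IDs together with the user resources attached to them.

Finally the children list, the data pages of the address space, and the file descriptor table are reclaimed, and the next thread is scheduled.

Next is the code that implements the system calls: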

pub fn sys_thread_create(entry: usize, arg: usize) -> isize {

let task = current_task().unwrap();

let process = task.process.upgrade().unwrap();

let new_task = Arc::new(TaskControlBlock::new(

Arc::clone(&process),

task.inner_exclusive_access()

.res

.as_ref()

.unwrap()

.ustack_base,

true,

));

add_task(Arc::clone(&new_task));

let new_task_inner = new_task.inner_exclusive_access();

let new_task_res = new_task_inner.res.as_ref().unwrap();

let new_task_tid = new_task_res.tid;

let mut process_inner = process.inner_exclusive_access();

let tasks = &mut process_inner.tasks;

while tasks.len() < new_task_tid + 1 {

tasks.push(None)

}

tasks[new_task_tid] = Some(Arc::clone(&new_task));

let new_task_trap_cx = new_task_inner.get_trap_cx();

*new_task_trap_cx = TrapContext::app_init_context(

entry,

new_task_res.ustack_top(),

kernel_token(),

new_task.kstack.get_top(),

trap_handler as usize,

);

(*new_task_trap_cx).x[10] = arg;

new_task_tid as isize

}

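To create a thread we first obtain the process that the current thread belongs to, then create a new TaskControlBlock, which allocates a tid. The thread is added to the scheduling queue and stored in the process's tasks vector at index tid. Once its trap context is set up, with the entry address and with arg in x[10], the call returns the new tid.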

pub fn sys_waittid(tid: usize) -> i32 {

let task = current_task().unwrap();

let process = task.process.upgrade().unwrap();

let task_inner = task.inner_exclusive_access();

let mut process_inner = process.inner_exclusive_access();

if task_inner.res.as_ref().unwrap().tid == tid {

return -1;

}

let mut exit_code: Option<i32> = None;

let waited_task = process_inner.tasks[tid].as_ref();

if let Some(waited_task) = waited_task {

if let Some(waited_exit_code) = waited_task.inner_exclusive_access().exit_code {

exit_code = Some(waited_exit_code);

}

} else {

// waited thread does not exist

return -1;

}

if let Some(exit_code) = exit_code {

// dealloc the exited thread

process_inner.tasks[tid] = None;

exit_code

} else {

// waited thread has not exited

-2

}

}

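After obtaining the process control block, sys_waittid checks the exit_code of the waited thread. It returns -1 if a thread waits for itself or the thread does not exist, -2 if the thread has not exited yet, and otherwise the exit code, after clearing the thread's slot in tasks.

Synchronization and mutual exclusion

The mutex here comes in two implementations, a spinning lock and a blocking lock: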
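The three outcomes of sys_waittid are easier to see on a model with no kernel types. In the sketch below each slot is `None` for "no such thread", `Some(None)` for "still running" and `Some(Some(code))` for "exited with code"; the function name `waittid` and this encoding are assumptions made for the illustration. Unlike the kernel code above, which indexes tasks[tid] directly, the sketch treats an out-of-range tid as a missing thread.

```rust
/// Model of the return values of sys_waittid.
fn waittid(tasks: &mut [Option<Option<i32>>], caller_tid: usize, tid: usize) -> i32 {
    // A thread cannot wait for itself.
    if caller_tid == tid {
        return -1;
    }
    // Out-of-range tids are treated like missing threads in this sketch.
    let slot = match tasks.get_mut(tid) {
        Some(slot) => slot,
        None => return -1,
    };
    let state = *slot;
    match state {
        // The waited thread does not exist.
        None => -1,
        // The waited thread has not exited yet.
        Some(None) => -2,
        // The waited thread has exited: free its slot and report the code.
        Some(Some(code)) => {
            *slot = None;
            code
        }
    }
}
```

A user-level wrapper would typically call this in a loop and yield while the result is -2. Note that a thread whose exit code happens to be -1 or -2 cannot be told apart from the error cases.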

pub struct MutexSpin {

locked: UPSafeCell<bool>,

}

impl MutexSpin {

pub fn new() -> Self {

Self {

locked: unsafe { UPSafeCell::new(false) },

}

}

}

impl Mutex for MutexSpin {

fn lock(&self) {

loop {

let mut locked = self.locked.exclusive_access();

if *locked {

drop(locked);

suspend_current_and_run_next();

continue;

} else {

*locked = true;

return ;

}

}

}

fn unlock(&self) {

let mut locked = self.locked.exclusive_access();

*locked = false;

}

}

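The spin-style lock simply checks whether the lock is already held. If another thread holds it, the current thread yields so that other threads can run, and retries when it is scheduled again. MutexBlocking, by contrast, maintains a wait queue internally: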

pub struct MutexBlocking {

inner: UPSafeCell<MutexBlockingInner>,

}

pub struct MutexBlockingInner {

locked: bool,

wait_queue: VecDeque<Arc<TaskControlBlock>>,

}

impl MutexBlocking {

pub fn new() -> Self {

Self {

inner: unsafe {

UPSafeCell::new(MutexBlockingInner{

locked: false,

wait_queue: VecDeque::new(),

})

},

}

}

}

impl Mutex for MutexBlocking {

fn lock(&self) {

let mut mutex_inner = self.inner.exclusive_access();

if mutex_inner.locked {

mutex_inner.wait_queue.push_back(current_task().unwrap());

drop(mutex_inner);

block_current_and_run_next();

} else {

mutex_inner.locked = true;

}

}

fn unlock(&self) {

let mut mutex_inner = self.inner.exclusive_access();

assert!(mutex_inner.locked);

if let Some(waking_task) = mutex_inner.wait_queue.pop_front() {

wakeup_task(waking_task);

} else {

mutex_inner.locked = false;

}

}

}

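Note that lock calls block_current_and_run_next here, which means the thread is taken out of the scheduling queue, so the kernel does not consider it when scheduling. On unlock, one blocked thread can be taken from wait_queue and put back into the scheduling queue; in that case locked stays true, so ownership of the lock passes directly to the woken thread.

Below is the semaphore implementation, which is very similar: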
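The hand-off behaviour of MutexBlocking can be modelled with thread IDs in place of task control blocks. This is a sketch for reasoning about the state machine only: `MutexModel` is a hypothetical type, and the return values stand in for "the caller would block" and "this thread would be woken".

```rust
use std::collections::VecDeque;

/// State machine of a blocking mutex, with tids instead of real tasks.
struct MutexModel {
    locked: bool,
    wait_queue: VecDeque<usize>,
}

impl MutexModel {
    fn new() -> Self {
        MutexModel { locked: false, wait_queue: VecDeque::new() }
    }

    /// Returns true if `tid` got the lock at once, false if it was queued
    /// (where the kernel would call block_current_and_run_next).
    fn lock(&mut self, tid: usize) -> bool {
        if self.locked {
            self.wait_queue.push_back(tid);
            false
        } else {
            self.locked = true;
            true
        }
    }

    /// Returns the tid that is woken and now owns the lock, if any.
    fn unlock(&mut self) -> Option<usize> {
        assert!(self.locked);
        match self.wait_queue.pop_front() {
            // Hand-off: `locked` stays true for the woken thread.
            Some(tid) => Some(tid),
            None => {
                self.locked = false;
                None
            }
        }
    }
}
```

Because waiters are served in FIFO order and the lock is never released while someone is waiting, a thread that arrives later cannot overtake a thread that is already queued.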

pub struct Semaphore {

pub inner: UPSafeCell<SemaphoreInner>,

}

pub struct SemaphoreInner {

pub count: isize,

pub wait_queue: VecDeque<Arc<TaskControlBlock>>,

}

impl Semaphore {

pub fn new(res_count: usize) -> Self {

Self {

inner: unsafe {

UPSafeCell::new(SemaphoreInner {

count: res_count as isize,

wait_queue: VecDeque::new(),

})

},

}

}

pub fn up(&self) {

let mut inner = self.inner.exclusive_access();

inner.count += 1;

if inner.count <= 0 {

if let Some(task) = inner.wait_queue.pop_front() {

wakeup_task(task);

}

}

}

pub fn down(&self) {

let mut inner = self.inner.exclusive_access();

inner.count -= 1;

if inner.count < 0 {

inner.wait_queue.push_back(current_task().unwrap());

drop(inner);

block_current_and_run_next();

}

}

}

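Again we maintain a wait queue, so this is effectively an extension of MutexBlocking: whenever down leaves count below 0, the calling thread is pushed onto the queue and blocked.

Below is the condition variable: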
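The meaning of a negative count becomes clear when the semaphore is traced by hand. The sketch below models up and down with thread IDs; `SemModel` is a hypothetical type, and the return values replace the actual blocking and waking.

```rust
use std::collections::VecDeque;

/// Counting semaphore model, with tids instead of real tasks.
struct SemModel {
    count: isize,
    wait_queue: VecDeque<usize>,
}

impl SemModel {
    fn new(res_count: usize) -> Self {
        SemModel { count: res_count as isize, wait_queue: VecDeque::new() }
    }

    /// Returns true if `tid` may proceed, false if it was queued.
    fn down(&mut self, tid: usize) -> bool {
        self.count -= 1;
        if self.count < 0 {
            self.wait_queue.push_back(tid);
            false
        } else {
            true
        }
    }

    /// Returns the tid that is woken by this release, if any.
    fn up(&mut self) -> Option<usize> {
        self.count += 1;
        if self.count <= 0 {
            self.wait_queue.pop_front()
        } else {
            None
        }
    }
}
```

While count is negative, its absolute value equals the number of queued threads. Starting with `SemModel::new(1)` gives the behaviour of a mutex, and `SemModel::new(0)` lets one thread wait for an event that another thread announces with up.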

pub struct Condvar {

pub inner: UPSafeCell<CondvarInner>,

}

pub struct CondvarInner {

pub wait_queue: VecDeque<Arc<TaskControlBlock>>,

}

impl Condvar {

pub fn new() -> Self {

Self {

inner: unsafe {

UPSafeCell::new(CondvarInner {

wait_queue: VecDeque::new(),

})

},

}

}

pub fn signal(&self) {

let mut inner = self.inner.exclusive_access();

if let Some(task) = inner.wait_queue.pop_front() {

wakeup_task(task);

}

}

pub fn wait(&self, mutex: Arc<dyn Mutex>) {

mutex.unlock();

let mut inner = self.inner.exclusive_access();

inner.wait_queue.push_back(current_task().unwrap());

drop(inner);

block_current_and_run_next();

mutex.lock();

}

}

This follows the usual approach of building a condition variable from a mutex plus blocking.

pub fn sys_mutex_create(blocking: bool) -> isize {

let process = current_process();

let mutex: Option<Arc<dyn Mutex>> = if !blocking {

Some(Arc::new(MutexSpin::new()))

} else {

Some(Arc::new(MutexBlocking::new()))

};

let mut process_inner = process.inner_exclusive_access();

if let Some(id) = process_inner

.mutex_list

.iter()

.enumerate()

.find(|(_, item)| item.is_none())

.map(|(id, _)| id)

{

process_inner.mutex_list[id] = mutex;

id as isize

} else {

process_inner.mutex_list.push(mutex);

process_inner.mutex_list.len() as isize - 1

}

}

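Here mutex is created as either a spin lock or a blocking lock. We then scan the process's mutex list for an empty slot and store the new mutex there, or append it if no slot is free. The return value is the ID of the lock, that is, its index in the list.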
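The same "first free slot, else append" pattern is used for mutexes, semaphores and condition variables. A generic sketch of it follows; `insert_into_slots` is a hypothetical helper, and the sketch assumes that some other code path can set a slot back to None, which the excerpts here do not show.

```rust
/// Store `item` in the first empty slot, or append it, and return its index.
fn insert_into_slots<T>(list: &mut Vec<Option<T>>, item: T) -> usize {
    if let Some(id) = list.iter().position(|slot| slot.is_none()) {
        list[id] = Some(item);
        id
    } else {
        list.push(Some(item));
        list.len() - 1
    }
}
```

Reusing freed slots keeps the IDs small and the list short, at the cost of a linear scan on every creation.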

pub fn sys_mutex_lock(mutex_id: usize) -> isize {

let process = current_process();

let process_inner = process.inner_exclusive_access();

let mutex = Arc::clone(process_inner.mutex_list[mutex_id].as_ref().unwrap());

drop(process_inner);

drop(process);

mutex.lock();

0

}

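This locks the corresponding mutex. If the lock is already held, a spin lock simply yields to the next thread, while a blocking lock adds the current thread to the lock's wait queue.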

pub fn sys_mutex_unlock(mutex_id: usize) -> isize {

let process = current_process();

let process_inner = process.inner_exclusive_access();

let mutex = Arc::clone(process_inner.mutex_list[mutex_id].as_ref().unwrap());

drop(process_inner);

drop(process);

mutex.unlock();

0

}

For semaphores we need the up and down functions to control the count.

pub fn sys_semaphore_create(res_count: usize) -> isize {

let process = current_process();

let mut process_inner = process.inner_exclusive_access();

let id = if let Some(id) = process_inner

.semaphore_list

.iter()

.enumerate()

.find(|(_, item)| item.is_none())

.map(|(id, _)| id)

{

process_inner.semaphore_list[id] = Some(Arc::new(Semaphore::new(res_count)));

id

} else {

process_inner

.semaphore_list

.push(Some(Arc::new(Semaphore::new(res_count))));

process_inner.semaphore_list.len() - 1

};

id as isize

}

pub fn sys_semaphore_up(sem_id: usize) -> isize {

let process = current_process();

let process_inner = process.inner_exclusive_access();

let sem = Arc::clone(process_inner.semaphore_list[sem_id].as_ref().unwrap());

drop(process_inner);

sem.up();

0

}

pub fn sys_semaphore_down(sem_id: usize) -> isize {

let process = current_process();

let process_inner = process.inner_exclusive_access();

let sem = Arc::clone(process_inner.semaphore_list[sem_id].as_ref().unwrap());

drop(process_inner);

sem.down();

0

}

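Creating a semaphore works like creating a mutex, but here the two functions up and down maintain count. An up means that one unit of a resource has been released, so one blocked task can be taken from the queue and run.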

pub fn sys_condvar_create() -> isize {

let process = current_process();

let mut process_inner = process.inner_exclusive_access();

let id = if let Some(id) = process_inner

.condvar_list

.iter()

.enumerate()

.find(|(_, item)| item.is_none())

.map(|(id, _)| id)

{

process_inner.condvar_list[id] = Some(Arc::new(Condvar::new()));

id

} else {

process_inner

.condvar_list

.push(Some(Arc::new(Condvar::new())));

process_inner.condvar_list.len() - 1

};

id as isize

}

pub fn sys_condvar_signal(condvar_id: usize) -> isize {

let process = current_process();

let process_inner = process.inner_exclusive_access();

let condvar = Arc::clone(process_inner.condvar_list[condvar_id].as_ref().unwrap());

drop(process_inner);

condvar.signal();

0

}

pub fn sys_condvar_wait(condvar_id: usize, mutex_id: usize) -> isize {

let process = current_process();

let process_inner = process.inner_exclusive_access();

let condvar = Arc::clone(process_inner.condvar_list[condvar_id].as_ref().unwrap());

let mutex = Arc::clone(process_inner.mutex_list[mutex_id].as_ref().unwrap());

drop(process_inner);

condvar.wait(mutex);

0

}

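For condition variables creation works the same way: a slot is allocated in the process's condition variable list. Looking at signal and wait, the difference is that wait also takes a mutex_id. wait releases that lock and then adds the thread control block to the queue. When a signal occurs, one thread control block is taken out of the queue; that thread then resumes inside sys_condvar_wait and locks the mutex again.

Consequently, when using a condition variable, the waiting thread must call wait first and then wait for the event to be signaled; a signal that arrives while the queue is empty is not recorded.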
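That a signal without a waiter is lost follows directly from the queue-only state of the condition variable. The sketch below models just that queue with thread IDs; `CondvarModel` is a hypothetical type, and the mutex handling around wait is left out.

```rust
use std::collections::VecDeque;

/// Condition variable model: the only state is the queue of waiters.
struct CondvarModel {
    wait_queue: VecDeque<usize>,
}

impl CondvarModel {
    fn new() -> Self {
        CondvarModel { wait_queue: VecDeque::new() }
    }

    /// `tid` starts waiting (the kernel would block it here).
    fn wait(&mut self, tid: usize) {
        self.wait_queue.push_back(tid);
    }

    /// Wake the longest-waiting thread, if there is one. With an empty
    /// queue nothing happens and nothing is remembered.
    fn signal(&mut self) -> Option<usize> {
        self.wait_queue.pop_front()
    }
}
```

This is why code using a condition variable usually keeps the actual condition in a shared variable protected by the mutex and checks it before calling wait: if the event has already happened, the thread skips the wait instead of sleeping on a signal that will not come again.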